Few-Shot Prompting for Classification with LangChain

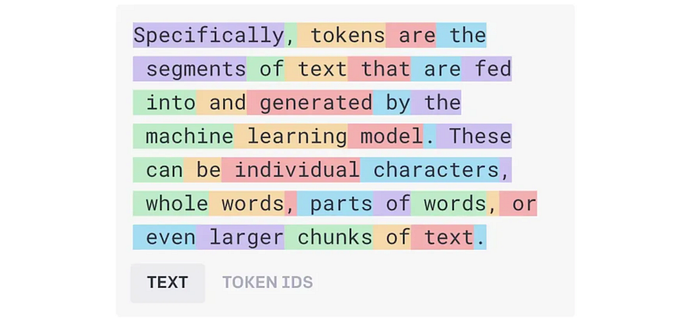

The dominant efficiency use case of Large Language Models (LLMs) is to generate initial chunks of content (be it code or document drafts) that your teams can then evaluate and refine. Behind the scenes, these models are also being refined for use in more specialised applications that require generation of structured outputs. One of these structured output use cases is to provide classification of text into a pre-defined set of categories. An effective way to do this with the model is by providing several examples of what this classification looks like (the so-called few-shot prompting technique), as well as instructions for the expected output structure.

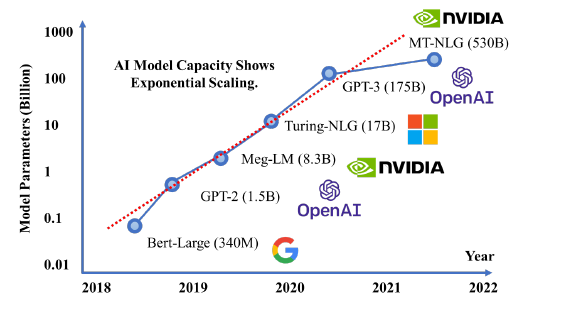

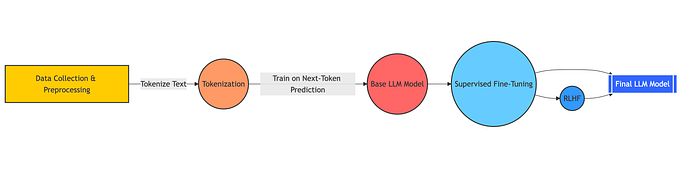

The reason we can do this is that during training these models have memorised a great deal of semantic content. This is what allows them to produce output that appears to convey understanding. We can argue over whether this is a mere simulacrum of understanding or some inkling of the real thing, but much of the practical power of LLMs comes from their ability to build useful internal representations of semantics. These representations are not always perfect, but the general rule appears to be: the more heavily a topic is discussed on the internet, the better the LLM's representations of that topic's entities and the relationships between them.

GumGum works on building technologies (like the Mindset Graph) that provide advertisers with deep insights into where their brands are being discussed across the internet, and the marketing implications. We are increasingly able to exploit LLMs to help us map the semantics of brands, products, advertising, and media contexts using few-shot prompting. We provide the LLMs with examples of how we want a piece of media content classified into a structured hierarchy, and then prompt it with new examples to classify.

LangChain is a Python library that provides an abstraction layer for applying this technique across many different underlying foundation models. In this example, we imagine that we have a database of brands that have been extracted from webpages. We might want to classify each brand as either ‘product’ or ‘service’ so that we can understand differences in advertising for each group independently. We could manually label all of this data, or label enough to build a traditional classifier. Alternatively, we can label just enough for validation purposes, and then test an LLM foundation model to see if this knowledge is embedded in its weights. This latter approach requires less labelling (and some labelling needs to be done regardless), so we can always test it as our first potential solution.

LangChain allows us to make use of the Pydantic library to define a class that encapsulates the structure of the data we want back from the model. This class is used both in the prompt template that queries the model and in the output parsing process. Here is the example we will use:

    from pydantic import BaseModel, Field

    # Structure we expect back from the model
    class BrandInfo(BaseModel):
        brand_type: str = Field(description="Categorisation of brand as a 'product' or 'service'")

We need to use one of the LangChain classes to create a parser that expects this structure back, as follows:

    from langchain_core.output_parsers import JsonOutputParser

    # Parser that expects JSON matching the BrandInfo structure
    parser = JsonOutputParser(pydantic_object=BrandInfo)

When we construct our prompt template for performing the classification, we provide both a few examples in the prompt and a definition of the expected structured response, as follows:

    from langchain_core.prompts import PromptTemplate

    prompt = PromptTemplate(
        template="""
    Your job is to classify a given brand into either 'product' or 'service'
    according to the offering the brand has in market.

    For example, the brand 'Samsung' produces a wide range of consumer
    electronics products, so it is classified as a 'product' brand.
    The brand 'GumGum' offers a wide range of advertising services, so it
    is classified as a 'service' brand.

    Classify the brand {input_brand}.

    {format_instructions}
    """,
        # Filled in on every execution
        input_variables=["input_brand"],
        # Filled in once, from the parser
        partial_variables={"format_instructions": parser.get_format_instructions()},
    )

LangChain allows us to define a parameterised prompt template that is populated at execution time. The names listed in input_variables tell the template which values will be supplied on each execution, whereas partial_variables holds values that are filled in once, up front; here, these are the formatting instructions generated by the parser object. Without this functionality we would need to manually write the JSON description that tells the model how to structure the response.
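The two examples in the template above are hard-coded. As the set of examples grows, it may be easier to keep them as data and render them into the prompt text. The following is a minimal sketch in plain Python, not part of LangChain; the names FEW_SHOT_EXAMPLES and render_examples are hypothetical and introduced only for illustration.

```python
# Hypothetical labelled examples; in practice these would come from your own data.
FEW_SHOT_EXAMPLES = [
    {
        "brand": "Samsung",
        "label": "product",
        "reason": "produces a wide range of consumer electronics products",
    },
    {
        "brand": "GumGum",
        "label": "service",
        "reason": "offers a wide range of advertising services",
    },
]


def render_examples(examples):
    """Render each labelled example as one sentence, one example per line."""
    lines = []
    for ex in examples:
        lines.append(
            f"The brand '{ex['brand']}' {ex['reason']}, "
            f"so it is classified as a '{ex['label']}' brand."
        )
    return "\n".join(lines)


examples_text = render_examples(FEW_SHOT_EXAMPLES)
print(examples_text)
```

The rendered text could then be supplied to the template as one more entry in partial_variables, with a matching placeholder such as {examples} in the template string (both hypothetical here). This keeps the examples in one place when you want to try different sets.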

In order to use all of this we need to instantiate a client to an LLM service, and then connect it to both the prompt and the output parser. In the code block below we do this using one of the models by Anthropic hosted on Amazon Bedrock:

    from langchain_aws import ChatBedrock

    region = "us-east-1"
    model_id = "anthropic.claude-3-haiku-20240307-v1:0"

    client = ChatBedrock(
        region_name=region,
        model_id=model_id,
    )

    # Pipe the prompt into the model, and the model output into the parser
    chain = prompt | client | parser

Note that LangChain provides a simple piping syntax for connecting (or chaining) elements together, borrowed from the Unix pipe. The final executable chain is defined on the last line by piping the output of one component into the next. To invoke the model we simply invoke this chain and pass in the brand we want classified:

    response = chain.invoke({"input_brand": "MY BRAND NAME"})

You will need to check that the response contains the expected JSON elements and create alternative pathways for when it does not. You also need to be aware that not all models support requests for structured responses. Even if a model has been fine-tuned to support this use case, it is not guaranteed to work in all instances. At GumGum we benchmark many models to determine which ones will reliably return data with both the right structure and accurate responses. The results vary dramatically across foundation models, and appear to be very sensitive to the particular task you are asking of them. This likely reflects differences in the training data and in the subset of world knowledge each model has managed to memorise.
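As one possible way to perform the check described above, the sketch below validates a response in plain Python. It assumes the response is either an already-parsed dict or a raw JSON string; the helper name extract_brand_type and the constant ALLOWED_BRAND_TYPES are hypothetical. Note that because brand_type is declared as a plain str, nothing in the schema itself restricts the value to the two categories, so the check is done here.

```python
import json

# The only two categories the prompt asks for.
ALLOWED_BRAND_TYPES = {"product", "service"}


def extract_brand_type(response, default=None):
    """Return a validated brand type, or `default` if the response is unusable."""
    # Accept a raw JSON string as well as an already-parsed dict.
    if isinstance(response, str):
        try:
            response = json.loads(response)
        except json.JSONDecodeError:
            return default
    if not isinstance(response, dict):
        return default
    value = response.get("brand_type")
    if not isinstance(value, str):
        return default
    # Tolerate stray whitespace and capitalisation in the model output.
    value = value.strip().lower()
    return value if value in ALLOWED_BRAND_TYPES else default


print(extract_brand_type({"brand_type": "Product "}))
print(extract_brand_type({"brand_type": "both"}, default="unknown"))
```

A None (or the chosen default) signals that the alternative pathway should be taken, for example retrying, trying another model, or queuing the brand for manual labelling. The call to the chain itself may also fail when the model output cannot be parsed, so in practice it is worth wrapping that call in error handling as well.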

Conclusion

The advantage of using LangChain to build an LLM few-shot classification application is that you get clean and easily extensible code. You do not need to manually create and maintain the JSON formatting instructions, and you can easily swap between different foundation models running on different services. We have successfully used this approach across Amazon Bedrock, OpenAI and the Hugging Face API services. We can quickly determine whether few-shot prompting will be sufficiently accurate for a given use case, and which of the foundation models provides superior results. In the emerging competitive landscape of AI foundation models, it is critical to have a code base that does not leave your organisation at the mercy of a single vendor.
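The model comparison mentioned above can be sketched as a small scoring loop. This is an illustrative outline only, not GumGum's benchmarking code: the function benchmark, the stub classifier, and the labelled data are all hypothetical. In practice classify would wrap a call to a chain built for a particular model, and the loop would be run once per model.

```python
def benchmark(classify, labelled_brands, allowed=("product", "service")):
    """Score one classifier on labelled data.

    `classify` is any callable that takes a brand name and returns the
    parsed response, for example a wrapper around a chain's invoke method.
    """
    total = len(labelled_brands)
    valid = 0
    correct = 0
    for brand, expected in labelled_brands:
        try:
            response = classify(brand)
        except Exception:
            continue  # a failed call counts as an invalid response
        predicted = response.get("brand_type") if isinstance(response, dict) else None
        if predicted in allowed:
            valid += 1
            if predicted == expected:
                correct += 1
    return {
        "valid_rate": valid / total if total else 0.0,
        "accuracy": correct / total if total else 0.0,
    }


def stub_classifier(brand):
    """Stand-in for a real model call, with canned answers."""
    answers = {
        "Samsung": {"brand_type": "product"},  # well-formed and correct
        "GumGum": {"brand_type": "product"},   # well-formed but wrong
        "Acme": {"brand": "?"},                # malformed structure
    }
    return answers[brand]  # KeyError for other brands simulates a failed call


labelled = [
    ("Samsung", "product"),
    ("GumGum", "service"),
    ("Acme", "service"),
    ("Unknown", "product"),
]
scores = benchmark(stub_classifier, labelled)
print(scores)
```

Reporting structural validity separately from accuracy makes it easier to tell a model that does not know the brands from one that knows them but does not follow the output format.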